How to Fix Crawlability Problems on Your Website

If you have researched high-value target keywords and created relevant content, but your pages still don't show up in Google's search results, the cause could be crawlability problems. These are common technical SEO issues that search engine spiders encounter when crawling a site.

What Are Crawlability Problems?

In general, crawlability refers to a search engine's ability to discover and navigate through the pages on your website. Search engines use bots, known as crawlers or spiders, to explore websites by following links and fetching content so that it can be indexed.

When crawlers can't reach or read certain pages, those pages may not be indexed, so they won't appear in search results. The issues that cause this are called crawlability problems.

Common crawlability problems include nofollow links, redirect loops (when two pages redirect to each other, creating an infinite loop), poor site structure, and slow site speed.
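To make the redirect-loop case concrete, here is a minimal Python sketch that follows a URL through a redirect map and reports whether it resolves, loops, or runs too long. It assumes you already have a source-to-target redirect map, for example exported from a crawl tool; the function name `follow_redirects` and the 10-hop limit are illustrative choices, not any tool's API.

```python
def follow_redirects(
    start: str, redirects: dict[str, str], max_hops: int = 10
) -> tuple[list[str], str]:
    """Follow start through a redirect map.

    redirects maps each redirecting URL to its target (e.g. exported
    from a crawl). Returns the chain of URLs visited plus a verdict:
    "ok", "loop" (a URL repeats), or "too-long" (more than max_hops).
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"  # e.g. /a -> /b -> /a never resolves
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too-long"
    return chain, "ok"
```

For example, `follow_redirects("/a", {"/a": "/b", "/b": "/a"})` returns `(["/a", "/b", "/a"], "loop")`.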

How to Fix Crawlability Problems?

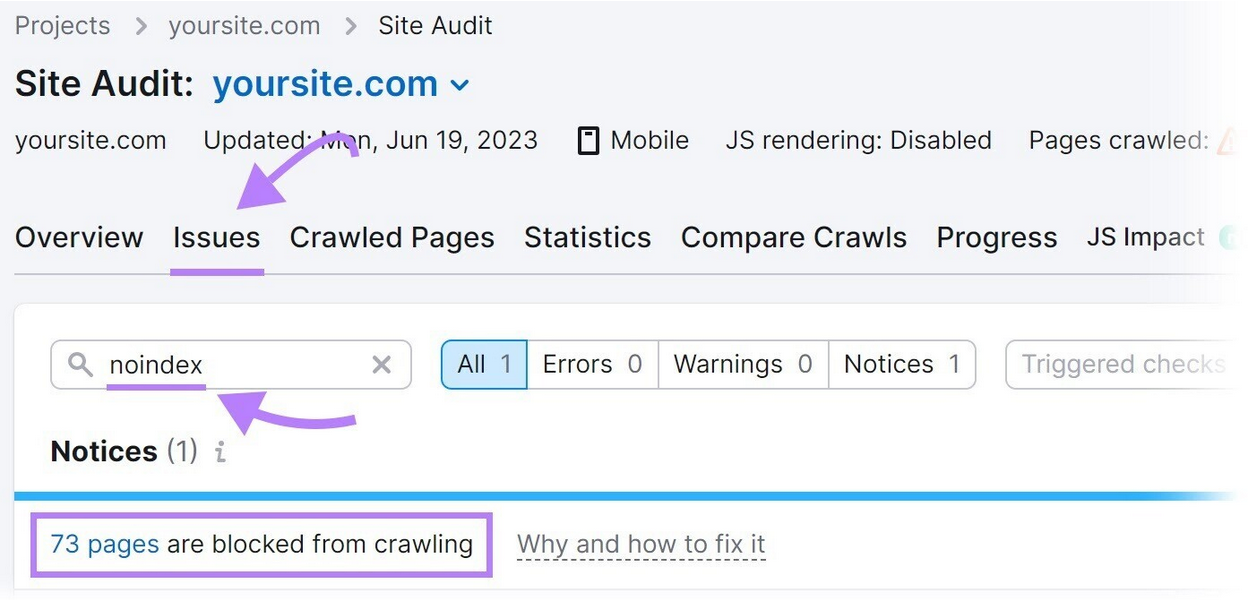

1. Noindex Tags

A noindex tag tells search engines not to index a page. If a page carries a noindex tag for a long time, Google may crawl it less often and eventually treat the tag as "noindex, nofollow", which means it also stops following the links on that page. But don't worry, there are solutions.

Firstly, use noindex tags only on pages you truly don't want indexed, such as login pages, thank-you pages, or duplicate content. Secondly, review your noindex tags regularly, using crawl tools to identify noindex issues. This helps you find and remove unnecessary noindex tags.
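A crawl tool does this at scale, but the underlying check is simple. The Python sketch below (standard library only) flags a page as noindexed if a `robots` or `googlebot` meta tag, or an `X-Robots-Tag` HTTP header, contains `noindex` or `none`. How you fetch the HTML and headers is left to you; `is_noindexed` and the other names are hypothetical, and the handling of user-agent prefixes in the header is simplified.

```python
from html.parser import HTMLParser

# "none" is shorthand for "noindex, nofollow"
NOINDEX_VALUES = {"noindex", "none"}


def split_directives(value: str) -> list[str]:
    """Split 'noindex, follow' or 'googlebot: noindex' into bare directives."""
    # Keep only the text after the last colon so an optional
    # user-agent prefix ("googlebot: noindex") is ignored.
    return [
        part.split(":")[-1].strip().lower()
        for part in value.split(",")
        if part.strip()
    ]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: value or "" for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.extend(split_directives(attr.get("content", "")))


def is_noindexed(html: str, x_robots_tag: str = "") -> bool:
    """True if the page's meta tags or X-Robots-Tag header say noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    found = set(parser.directives) | set(split_directives(x_robots_tag))
    return bool(found & NOINDEX_VALUES)
```

Running it over every URL in your sitemap gives a quick list of pages to double-check against the ones you actually meant to keep out of the index.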

2. Broken Links (404 Errors)

Broken links can hamper crawling and prevent search engines from accessing your content. This can lead to reduced visibility in search results.

Check your site regularly and remove or replace outdated URLs. When you find broken links, fix them immediately by updating or removing the link. Minimize unnecessary redirects and update internal links to reflect your website's structure.
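As a rough illustration, this Python sketch extracts the links from one page and reports those that return an error status. It is a hypothetical helper built on the standard library, not a replacement for a full crawler: `http_status` sends a `HEAD` request and follows redirects, and the URLs are placeholders on `example.com`.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin

SKIP_PREFIXES = ("mailto:", "tel:", "javascript:", "#")


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if href and not href.startswith(SKIP_PREFIXES):
            # Resolve relative links and drop "#section" fragments.
            self.links.append(urldefrag(urljoin(self.base_url, href)).url)


def http_status(url: str, timeout: float = 10.0) -> int:
    """Final status code after redirects; 0 if the server can't be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx / 5xx responses
        return err.code
    except OSError:  # DNS failure, refused connection, timeout
        return 0


def find_broken_links(
    html: str, page_url: str, status_of: Callable[[str], int] = http_status
) -> list[tuple[str, int]]:
    """Return (url, status) for every link on the page that looks broken."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    collector.close()
    broken = []
    for link in dict.fromkeys(collector.links):  # de-duplicate, keep order
        status = status_of(link)
        if status == 0 or status >= 400:
            broken.append((link, status))
    return broken
```

Some servers answer `HEAD` with 405, so you may need to retry those with `GET`. Passing a different `status_of` function also lets you test the logic without touching the network.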

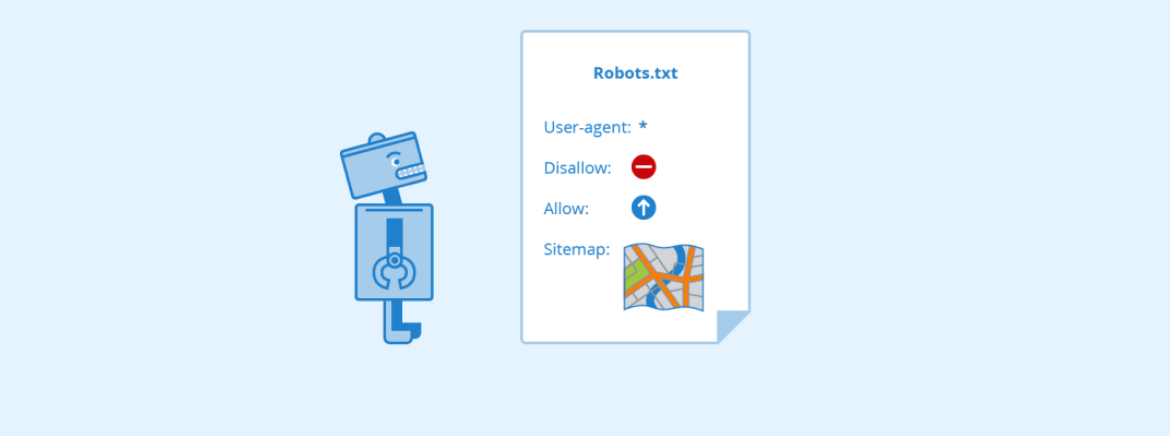

3. Crawlability Problems Related to robots.txt

One of the most common crawlability problems is related to robots.txt. A misconfigured robots.txt file can block crawlers from pages you want indexed.

To resolve this issue, start by examining your website's robots.txt file and make sure important pages and folders are not blocked. Use the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to check whether Google can fetch and parse your robots.txt file.

If necessary, modify your robots.txt file to allow search engines to crawl important pages and directories, and keep checking it as your website changes.
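Before uploading a changed robots.txt, you can sanity-check it locally. This sketch uses Python's standard `urllib.robotparser` module; the rules and URLs are hypothetical, with `/blog/` blocked by mistake so the check has something to find. Python's parser follows the original robots.txt conventions and does not fully reproduce Google's matching (for example, `*` and `$` wildcards inside paths, or Google's most-specific-rule precedence), so treat the result as a first pass and confirm with Google's own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt in which /blog/ has been blocked by mistake.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""

# Hypothetical URLs you want to check.
URLS_TO_CHECK = [
    "https://example.com/",
    "https://example.com/blog/fix-crawlability/",
    "https://example.com/wp-admin/options.php",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


for url in blocked_urls(ROBOTS_TXT, URLS_TO_CHECK):
    print("blocked:", url)
```

Here the blog post shows up as blocked alongside `/wp-admin/`, which is exactly the kind of mistake to catch before it costs you indexed pages.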

4. Slow Page Load Speed

Slow page load times frustrate search engine crawlers: if your server responds slowly, search engines may crawl fewer pages per visit and may not index your content efficiently. Make sure you fix it right away!

You can try reducing image file sizes without compromising quality to speed up loading. Also, use a content delivery network (CDN) to distribute content closer to users and reduce latency.

Finally, optimize your server to improve its performance: reduce server response times and use reliable hosting.
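To see whether server response time is the bottleneck, you can time a few requests yourself. The Python sketch below measures how long a URL takes to return its headers (roughly time to first byte, including DNS lookup and connection setup) and its full HTML. It is a simplified, hypothetical helper: it fetches only the HTML document, so it says nothing about images, CSS, or JavaScript, which tools such as Lighthouse cover.

```python
import statistics
import time
import urllib.request
from dataclasses import dataclass


@dataclass
class LoadTiming:
    headers_s: float  # until status line + headers arrive (roughly TTFB, incl. DNS/connect)
    total_s: float  # headers plus downloading the full body
    size_bytes: int


def measure_load(url: str, timeout: float = 10.0) -> LoadTiming:
    """Fetch url once and time the response (HTML only, no sub-resources)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        headers_done = time.perf_counter()
        body = response.read()
    end = time.perf_counter()
    return LoadTiming(headers_done - start, end - start, len(body))


def median_headers_time(url: str, runs: int = 5) -> float:
    """Median header time over several runs, to smooth out network noise."""
    return statistics.median(measure_load(url).headers_s for _ in range(runs))
```

Comparing the median before and after a hosting or caching change gives you a simple, repeatable signal.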

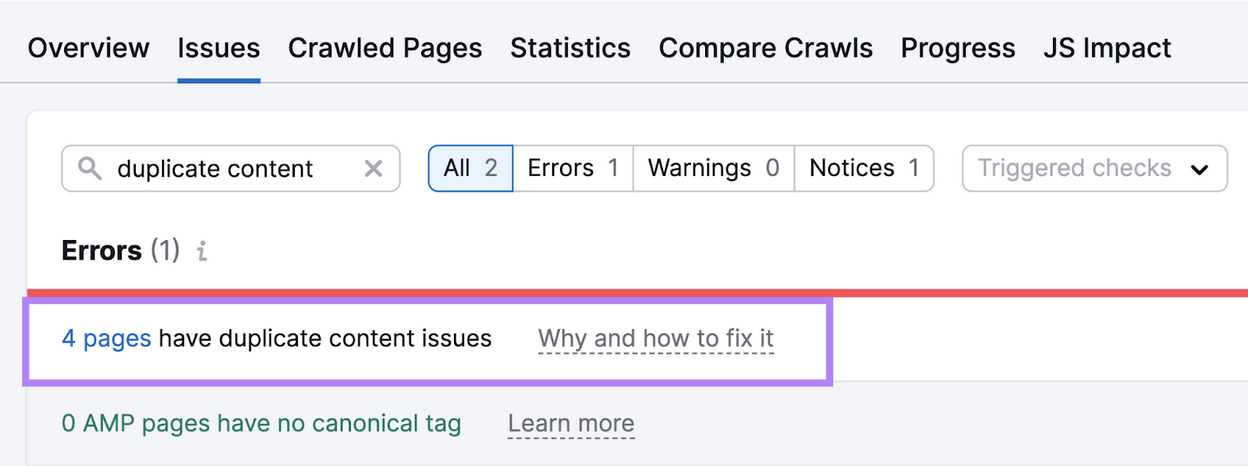

5. Duplicate Content

When search engines find identical or similar content on multiple pages, they may not know which version to index. That's why it's crucial that each page on your website offers clear, unique content.

To fix it, use canonical tags to indicate the primary version of a page, and organize your URLs logically and consistently. Merge duplicate pages or use 301 redirects to consolidate them. Additionally, regularly produce unique, high-quality content.
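Exact duplicates are easy to find programmatically. The sketch below is a simple Python approach, assuming you already have each page's HTML: it hashes the visible text after lowercasing and collapsing whitespace, then groups URLs that share a hash. It will not catch near-duplicates (that needs a fuzzy technique such as shingling), and the function names are illustrative.

```python
import hashlib
import re
from collections import defaultdict
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def fingerprint(html: str) -> str:
    """SHA-256 of the page's normalized visible text."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    text = re.sub(r"\s+", " ", " ".join(extractor.parts)).strip().lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose visible text is identical after normalization."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[fingerprint(html)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]
```

Each group it returns is a candidate for a canonical tag or a 301 redirect to one preferred URL.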

6. XML Sitemap Errors

Generally, an XML sitemap guides search engines in locating and understanding your website's content. Errors in the sitemap can lead to incomplete indexing and lower visibility in search results.

Review your sitemap regularly to spot errors or inconsistencies. Then make sure your XML sitemap reflects your current website structure and content.
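Part of that review can be automated. This Python sketch parses a standard sitemap (the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace) with the standard library and flags duplicate entries, non-HTTPS URLs, URLs on an unexpected host, and files over the protocol's 50,000-URL limit. Requiring HTTPS and a single host are assumptions about your site; adjust them as needed.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_issues(xml_text: str, expected_host: str) -> list[str]:
    """Return human-readable problems found in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    issues = []
    for url, count in Counter(locs).items():
        if count > 1:
            issues.append(f"duplicate entry: {url}")
    for url in dict.fromkeys(locs):  # unique URLs, original order
        parts = urlparse(url)
        if parts.scheme != "https":  # assumption: the site is served over HTTPS
            issues.append(f"not https: {url}")
        if parts.netloc != expected_host:  # assumption: one canonical host
            issues.append(f"unexpected host: {url}")
    if len(locs) > 50_000:
        issues.append("more than 50,000 URLs; split into multiple sitemaps")
    return issues
```

Combining this with the broken-link and noindex checks from earlier sections tells you whether every listed URL is actually crawlable and indexable.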

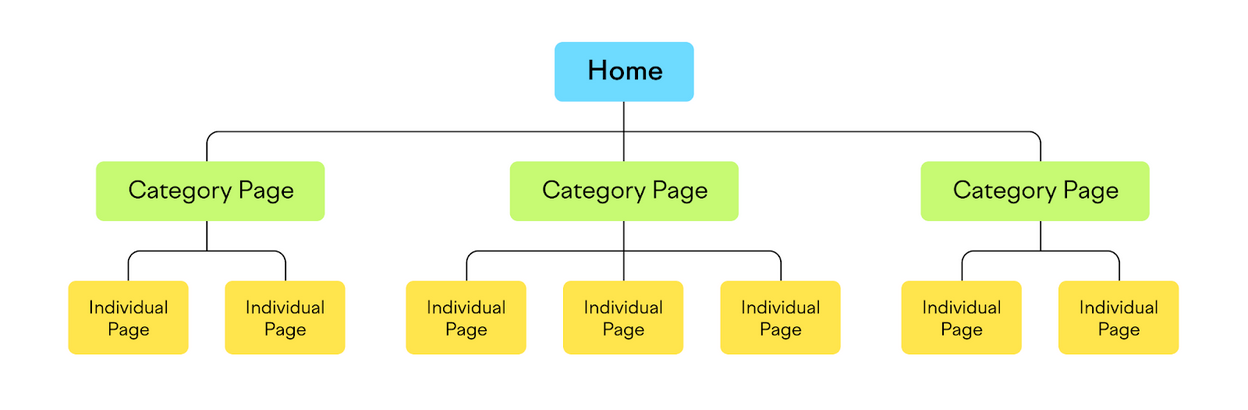

7. Poor Website Architecture

Your website's structure might be one cause of crawlability problems. Fixing poor website architecture is therefore crucial, so that search bots can find your content and it can appear in search results.

To fix this problem, avoid an inconsistent hierarchy and haphazard categorizing and linking of your pages, which can confuse search engine crawlers.

So, create a clear hierarchy and organize your content into logical categories and subcategories. Then, link them together in a way that reflects that hierarchy.
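One way to check the hierarchy is to measure click depth: how many links a crawler must follow from the homepage to reach each page. The Python sketch below runs a breadth-first search over an internal-link map, assumed to come from a crawl, and reports orphan pages (unreachable from the homepage) and pages deeper than a limit. The three-click limit is a common rule of thumb, not a Google requirement, and the function names are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: fewest clicks from home to every reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def architecture_report(
    links: dict[str, list[str]], home: str, all_pages: list[str], max_depth: int = 3
) -> tuple[list[str], list[str]]:
    """Return (orphan pages, pages deeper than max_depth clicks)."""
    depth = click_depths(links, home)
    orphans = sorted(page for page in all_pages if page not in depth)
    too_deep = sorted(page for page, d in depth.items() if d > max_depth)
    return orphans, too_deep
```

Orphans need internal links from a relevant category page; deep pages usually need a shorter path, for example from a hub or category page higher in the hierarchy.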

8. Mobile Usability

Mobile usability is a key priority for SEO, especially since Google uses mobile-first indexing. If your site is deemed unusable on mobile devices, Google may rank its pages lower in search results.

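Test your key landing pages on mobile devices and with a testing tool such as Lighthouse in Chrome DevTools (Google retired its standalone Mobile-Friendly Test tool in December 2023), and monitor issues in Google Search Console. Then review the output and make sure the site's content appears correctly.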
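A frequent cause of mobile rendering problems is a missing or fixed-width viewport meta tag. This hypothetical Python helper checks the page HTML for `<meta name="viewport">` and whether it sets `width=device-width`; it is a quick pre-check, not a substitute for testing on real devices.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportParser(HTMLParser):
    """Remembers the content of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            if self.viewport is None:
                self.viewport = attr.get("content") or ""


def viewport_problems(html: str) -> list[str]:
    """Return a list of viewport issues (empty if none were found)."""
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.viewport is None:
        return ['missing <meta name="viewport"> tag']
    # "width=device-width, initial-scale=1" -> {"width": "device-width", ...}
    settings = {}
    for item in parser.viewport.split(","):
        key, _, value = item.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return ["viewport width is not device-width"]
    return []
```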

9. Rendering Issues

Google's ability to render JavaScript is improving. Although progressive enhancement is still the recommended method, it's useful to render pages fully, the way Google does, to see what a searcher would find on the page.

If the rendered version does not contain the vital content on the page, there is likely a problem to address. The rendered version should also match what Google sees; the URL Inspection tool in Google Search Console shows how Googlebot renders a page. After that, analyze the results of a JavaScript-rendered crawl of your site.
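If you can save both the HTML your server sends and the rendered HTML (for example, from the URL Inspection tool in Google Search Console or from a headless browser), a short script can show which key content depends on JavaScript. This Python sketch is an illustration under those assumptions; the key phrases are whatever text you consider vital on the page, and the names are hypothetical.

```python
import re
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Collects text nodes, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def normalized_text(html: str) -> str:
    parser = TextOnly()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip().lower()


def compare_render(
    raw_html: str, rendered_html: str, key_phrases: list[str]
) -> dict[str, list[str]]:
    """Sort key phrases into 'js_only' and 'missing' buckets."""
    raw = normalized_text(raw_html)
    rendered = normalized_text(rendered_html)
    report: dict[str, list[str]] = {"js_only": [], "missing": []}
    for phrase in key_phrases:
        needle = re.sub(r"\s+", " ", phrase).strip().lower()
        if needle not in rendered:
            report["missing"].append(phrase)  # absent even after rendering
        elif needle not in raw:
            report["js_only"].append(phrase)  # appears only once JavaScript runs
    return report
```

Anything under `missing` is a clear problem; anything under `js_only` depends on rendering succeeding, which is where progressive enhancement helps.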

10. Thin Content

If your site doesn't have any of the issues above but still isn't indexed, you may have "thin" or low-value content. To fix it, analyze the content on your site that Google hasn't indexed and review the target queries for each page. Then refresh the content or create new content based on keyword research and search intent to provide better value.
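To build a first list of candidates for review, you could count the visible words on each page. The Python sketch below does that with the standard library; the 300-word threshold is an arbitrary assumption, since Google does not publish a minimum word count, so treat flagged pages as prompts for a manual review, not as verdicts.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text nodes, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def visible_word_count(html: str) -> int:
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.parts).split())


def flag_thin_pages(pages: dict[str, str], min_words: int = 300) -> list[tuple[str, int]]:
    """(url, word count) for pages below min_words, thinnest first."""
    counts = ((url, visible_word_count(html)) for url, html in pages.items())
    return sorted((item for item in counts if item[1] < min_words), key=lambda item: item[1])
```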

Conclusion

That's how you fix crawlability problems: identify and resolve these common issues. Doing so can improve your website's performance in search results, attract more organic traffic, and keep your site's SEO healthy.

Don't forget: regular monitoring and proactive fixes will keep your site accessible to search engines and users alike.